Some organizations use wildcard certificates. The problem with these certificates is that they start to lead a life of their own. So when such a certificate is about to expire, you may well wonder where it is installed. Luckily, PowerShell saves us from having to open the certificate store on every server in the domain. At first I thought the easiest way would be a PowerShell remoting session, but that requires PowerShell remoting to be enabled on every server. Unfortunately, not all servers have it enabled, and some do not even run a reasonably recent PowerShell version. So, mainly for documentation purposes, I'll write down the commands to find a certificate.

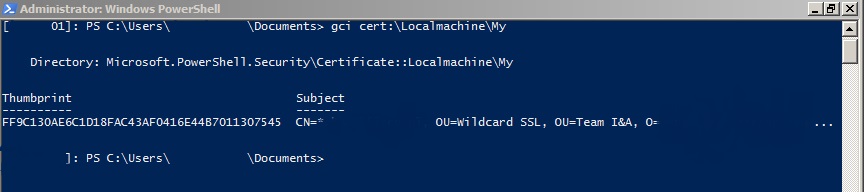

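A certificate has a thumbprint. Unlike the serial number, the thumbprint is not a field stored inside the certificate. Windows calculates it as a SHA-1 hash over the entire certificate, so it is identical on every server where that certificate is installed. You can easily find the thumbprint of the certificate you are looking for by running the command on a server where the certificate is present.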
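To see why the thumbprint is the same everywhere, the sketch below creates a throwaway self-signed certificate in memory and compares its `Thumbprint` property with a SHA-1 hash computed over its raw DER bytes. The subject `CN=*.contoso.com` is a made-up example. The sketch then rebuilds a second certificate object from the same bytes, which is roughly what happens when the certificate is imported on another server. It assumes a .NET runtime that provides the `CertificateRequest` class (for example PowerShell 7, or Windows PowerShell on .NET Framework 4.7.2 or later). Nothing is written to any certificate store.

```powershell
# Create a throwaway self-signed certificate in memory (hypothetical subject name)
$rsa = [System.Security.Cryptography.RSA]::Create(2048)
$request = [System.Security.Cryptography.X509Certificates.CertificateRequest]::new(
    'CN=*.contoso.com',
    $rsa,
    [System.Security.Cryptography.HashAlgorithmName]::SHA256,
    [System.Security.Cryptography.RSASignaturePadding]::Pkcs1)
$cert = $request.CreateSelfSigned([DateTimeOffset]::Now, [DateTimeOffset]::Now.AddYears(1))

# Compute SHA-1 over the DER-encoded certificate and format it as uppercase hex without dashes
$sha1 = [System.Security.Cryptography.SHA1]::Create()
$computed = [System.BitConverter]::ToString($sha1.ComputeHash($cert.RawData)) -replace '-', ''

# Rebuild an independent certificate object from the raw bytes, as another server would see it
$copy = [System.Security.Cryptography.X509Certificates.X509Certificate2]::new($cert.RawData)

"Thumbprint property : $($cert.Thumbprint)"
"SHA-1 of raw bytes  : $computed"
"Thumbprint of copy  : $($copy.Thumbprint)"
```

All three lines print the same 40-character hex string, because the thumbprint depends only on the certificate's bytes and not on the machine that holds it.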

Just copy the thumbprint and enter it into the command to find the certificate, like the one below:

```powershell
Invoke-Command -ComputerName #COMPUTERNAME# -ScriptBlock {
    Get-ChildItem Cert:\LocalMachine\My | Where-Object { $_.Thumbprint -eq "FF9C130AE6C1D18FAC43AF0416E44B7011307545" }
}
```
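If the thumbprint was copied from the Details tab of the certificate dialog instead of from PowerShell output, it can contain spaces between the byte pairs (depending on the Windows version) and sometimes an invisible leading character. The `-eq` comparison then silently finds nothing. The hypothetical helper `ConvertTo-CleanThumbprint` below is one way to clean up a pasted value. It keeps only hexadecimal characters and checks for the 40 characters of a SHA-1 thumbprint.

```powershell
function ConvertTo-CleanThumbprint {
    param([Parameter(Mandatory)][string]$Raw)

    # Keep only hex digits; this drops spaces and invisible characters such as U+200E
    $clean = ($Raw -replace '[^0-9A-Fa-f]', '').ToUpperInvariant()

    # A SHA-1 thumbprint is 20 bytes, i.e. 40 hex characters
    if ($clean.Length -ne 40) {
        throw "Expected 40 hex characters, got $($clean.Length): '$clean'"
    }
    $clean
}

# Example: a pasted value with spaces and a leading left-to-right mark (U+200E)
$pasted = ([string][char]0x200E) + 'ff 9c 13 0a e6 c1 d1 8f ac 43 af 04 16 e4 4b 70 11 30 75 45'
$Thumbprint = ConvertTo-CleanThumbprint $pasted
$Thumbprint
```

The cleaned value (`FF9C130AE6C1D18FAC43AF0416E44B7011307545` in this example) can then be pasted into the command or assigned to `$Thumbprint` in the script.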

Replace #COMPUTERNAME# with the name of the computer you want to query. This works, but as I mentioned before, I want to find the certificate regardless of operating system constraints or whether PowerShell remoting is enabled. The script below handles that: instead of using remoting, it opens the personal certificate store of each server remotely through the .NET `X509Store` class. Change the thumbprint to the thumbprint of your certificate. The script exports the names of all servers where the certificate with the given thumbprint is present. Feel free to modify it to your needs.

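```powershell
# Needed on PowerShell 2.0; newer versions load the module automatically
Import-Module ActiveDirectory

# Returns the certificates in the LocalMachine\My (Personal) store of a computer,
# without using PowerShell remoting
Function Get-Cert($computer = $env:COMPUTERNAME) {
    # Open the store read-only; we only need to read it
    $ro = [System.Security.Cryptography.X509Certificates.OpenFlags]"ReadOnly"

    # Use the LocalMachine store location. You could potentially use a different location.
    $lm = [System.Security.Cryptography.X509Certificates.StoreLocation]"LocalMachine"

    # "\\computer\My" points at the personal (My) store on the remote computer
    $store = New-Object System.Security.Cryptography.X509Certificates.X509Store("\\$computer\My", $lm)

    # Establish the connection with the remote computer
    $store.Open($ro)
    try {
        # Return the certificates to the caller
        $store.Certificates
    }
    finally {
        # Release the handle to the remote store
        $store.Close()
    }
}

# Thumbprint of the certificate to look for
$Thumbprint = "FF9C130AE6C1D18FAC43AF0416E44B7011307545"

# Names of the servers on which the certificate is found
$List = @()

# Computer accounts change their machine password every 30 days by default,
# so servers whose password was set in the last 60 days are considered active
$Servers = Get-ADComputer -Filter * -Properties OperatingSystem, PasswordLastSet |
    Where-Object { $_.OperatingSystem -match "Server" -and $_.PasswordLastSet -gt (Get-Date).AddDays(-60) }

foreach ($Server in $Servers) {
    # Show progress in the console
    $Server.DNSHostName

    try {
        # Wrap in @() so .Count also works on PowerShell 2.0 when exactly one certificate matches
        $match = @(Get-Cert $Server.DNSHostName | Where-Object { $_.Thumbprint -eq $Thumbprint })
        if ($match.Count -ne 0) {
            $List += $Server.DNSHostName
        }
    }
    catch {
        # Unreachable server or access denied: report it and continue with the next server
        Write-Warning "Could not read the certificate store on $($Server.DNSHostName): $_"
    }
}

# Append one server name per line to the output file
$List | Add-Content -Path ".\ComputersWithCertificate.txt"
```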